Code and AI: Emerging Patterns

The world of computing stands at a fascinating crossroads where traditional programming meets artificial intelligence, creating a new landscape of possibilities and challenges. At its core, this transformation represents a shift from rigid, predictable systems to adaptive ones that solve problems in a more human-like way.

The Old Guard: Classical Programming's DNA

Classical programming has, until recently, always been the only option for harnessing digital logic. It's like a well-organized recipe book - every instruction must be precise, every ingredient (input) must be exactly as specified, and if you follow the steps correctly, you'll get the same cake every time. This code operates in a world of strict rules and expectations, where deviation from the prescribed format typically leads to errors or unexpected results.

AI: The Adaptive Newcomer

In contrast, AI models, and in particular Large Language Models (LLMs), operate more like an experienced chef who can work with whatever ingredients are available and still create something delicious. They can handle natural language instructions and unstructured inputs with remarkable flexibility. Let's look at a practical example:

Imagine you need to extract appointment details from emails. A classical program would need carefully formatted input like:

```
Date: 2025-03-25
Time: 14:00
Location: Office
```

If the inputs are slightly off, you might be able to apply some regular expressions to normalize them, but this approach has its limits.

In contrast, an AI can handle something as casual as:

"Hey, let's meet next Tuesday afternoon around 2-ish at the office to discuss the project"
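To make the contrast concrete, here is a minimal Python sketch of the classical side. The function name `parse_appointment` and the exact field layout are assumptions made for illustration; the point is only that the structured form parses cleanly while the casual sentence is rejected outright.

```python
import re
from datetime import datetime

# Hypothetical strict format: one "Key: value" pair per line, as in the example above.
FIELD_PATTERN = re.compile(r"^(Date|Time|Location):\s*(.+)$")


def parse_appointment(text: str) -> dict:
    """Parse the rigid three-field format; raise ValueError on anything else."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between fields
        match = FIELD_PATTERN.match(line)
        if match is None:
            raise ValueError(f"Unrecognized line: {line!r}")
        fields[match.group(1)] = match.group(2).strip()

    missing = {"Date", "Time", "Location"} - fields.keys()
    if missing:
        raise ValueError(f"Missing fields: {sorted(missing)}")

    # strptime enforces the expected date and time layout.
    when = datetime.strptime(f"{fields['Date']} {fields['Time']}", "%Y-%m-%d %H:%M")
    return {"when": when, "location": fields["Location"]}


structured = "Date: 2025-03-25\nTime: 14:00\nLocation: Office"
casual = "Hey, let's meet next Tuesday afternoon around 2-ish at the office to discuss the project"

print(parse_appointment(structured))

try:
    parse_appointment(casual)
except ValueError as error:
    print("Classical parser gave up:", error)
```

Extra patterns could cover a few more phrasings, but every new variation needs its own rule, which is exactly the limit described above.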

The Human Connection

It's no coincidence that AI's approach feels more natural to us. Human intelligence operates similarly - we're naturally equipped to handle ambiguity, interpret context, and adapt to unexpected situations. We don't crash when someone makes a typo or speaks in broken English; we adapt and understand.

Complementary Strengths

It would be wrong, however, to assume that the AI approach is an unmitigated improvement on classical code. Both have their strengths and weaknesses, so it is important to consider where and when to use AI versus code.

Classical Code's Superpowers:

1. Transparency: Like a glass-walled machine, you can see every gear turning

2. Predictability: It follows rules religiously - no surprises

3. Security: Every action can be audited and controlled

4. Performance: Lightning-fast execution and far lower energy consumption compared to AI inference

AI's Magic Touch:

1. Natural Language Processing: It speaks human

2. Capturing Meaning: It understands the meaning of code, text and other content

3. Flexibility: Handles messy, real-world inputs gracefully and effortlessly

4. Multilingual Capabilities: Understands and translates between multiple languages

5. Problem-Solving: Can reason, ideate, and evaluate solutions on its own

6. Multi-modal Processing: It can interpret, create and transform all manner of content

The Future is Hybrid: Emerging Patterns of Integration

The fusion of classical programming and AI is giving rise to sophisticated hybrid architectures that leverage the strengths of both paradigms. This isn't just about using both approaches side by side. It is about identifying which paradigm is best suited for a particular task. How important is transparency? What are the desired outputs? Is creativity desired? Does the task involve natural language? How fast or energy efficient is the task at hand and what hardware is available to perform it? Often it makes sense to combine the two approaches at a much more granular level, so that AI and classical code work together, creating synergistic systems where each component enhances the other's capabilities.

Here are some common high-level patterns at the crossroads of AI and classical code:

1. Using AI to work on classical code

At the moment, one of the hottest AI use cases is performing classical code development tasks. It is already possible to develop powerful classically-coded applications in a fraction of the time, using AI models trained on code from any number of different programming languages. This approach combines:

AI's ability to understand natural language requirements, as well as the code that can fulfill them

The reliability and performance of classical programming

Automatic code generation with human oversight

Built-in documentation and explanation capabilities

2. AI Agents using classical code

AI agents can accept natural language instructions from humans, reason about how appropriate solutions could best be delivered, and then coordinate the performance of those solutions, in whole or in part by employing classical code. For example, the Model Context Protocol (MCP), recently developed by Anthropic, represents a crucial bridge between AI and classical systems. It provides:

Standardized communication protocols between AI and external tools

Real-time data access for context-aware decisions

Bidirectional communication enabling AI to both gather information and trigger actions

A framework for adding new tools and capabilities
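The general idea of an agent calling classical code can be sketched in a few lines of Python. This is not the MCP wire format or any MCP SDK; it is a hypothetical, stripped-down illustration in which the model emits a structured request and classical code looks the tool up in a registry and runs it. The names `TOOLS`, `tool` and `dispatch`, and the request shape, are all assumptions.

```python
import json
from typing import Callable, Dict

# Hypothetical registry of classical functions the agent is allowed to call.
TOOLS: Dict[str, Callable[..., object]] = {}


def tool(func: Callable[..., object]) -> Callable[..., object]:
    """Register a plain Python function as a callable tool."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def word_count(text: str) -> int:
    return len(text.split())


def dispatch(request_json: str) -> str:
    """Run one tool request and return a JSON reply for the model to read."""
    request = json.loads(request_json)
    name = request.get("tool")
    if name not in TOOLS:
        return json.dumps({"ok": False, "error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**request.get("arguments", {}))
    except TypeError as error:  # wrong or missing arguments
        return json.dumps({"ok": False, "error": str(error)})
    return json.dumps({"ok": True, "result": result})


# Stand-ins for what a model might emit; no real model is called here.
print(dispatch('{"tool": "add", "arguments": {"a": 2, "b": 3}}'))
print(dispatch('{"tool": "delete_everything", "arguments": {}}'))
```

Adding a capability then amounts to registering one more function, while anything outside the registry is refused by ordinary, auditable code.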

3. AI sandboxed by classical code

Because AI, for all its magic, has the potential to "go off the rails", behaving in undesired or unexpected ways, it can be very useful to limit its range of possible outputs by using classical code. For instance, using type-safe programming to ensure AI-generated responses conform to predefined structures, or implementing validation functions that check if AI outputs meet specific criteria before being used in production. This combines the flexibility of AI with the reliability of classical programming safeguards.
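As a minimal sketch of such a validation step, the Python below treats the model's reply as untrusted text and only lets it through once it fits a predefined structure. The `Appointment` type, the field names and the `ALLOWED_LOCATIONS` rule are hypothetical, and the strings passed in stand in for model output; no real model is called.

```python
import json
from dataclasses import dataclass
from datetime import datetime

ALLOWED_LOCATIONS = {"Office", "Remote"}  # hypothetical business rule


@dataclass(frozen=True)
class Appointment:
    date: str      # expected as YYYY-MM-DD
    time: str      # expected as HH:MM
    location: str


def validate_model_output(raw: str) -> Appointment:
    """Turn untrusted model text into a typed object, or raise ValueError."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as error:
        raise ValueError(f"not valid JSON: {error}") from error

    if not isinstance(data, dict) or set(data) != {"date", "time", "location"}:
        raise ValueError("expected exactly the keys date, time, location")
    if not all(isinstance(value, str) for value in data.values()):
        raise ValueError("all fields must be strings")

    # strptime raises ValueError for malformed or impossible dates and times.
    datetime.strptime(data["date"], "%Y-%m-%d")
    datetime.strptime(data["time"], "%H:%M")

    if data["location"] not in ALLOWED_LOCATIONS:
        raise ValueError(f"location not allowed: {data['location']!r}")
    return Appointment(**data)


good = '{"date": "2025-03-25", "time": "14:00", "location": "Office"}'
bad = '{"date": "next Tuesday", "time": "2-ish", "location": "Office"}'

print(validate_model_output(good))
try:
    validate_model_output(bad)
except ValueError as error:
    print("Rejected:", error)
```

A rejected reply can be retried, logged or escalated to a human, but it never reaches downstream code in an unexpected shape.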

An AI's access to resources can also be controlled by routing it through classically coded gateways that employ conventional authentication and authorization, cryptographic proofs, etc.
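A gateway of this kind can be sketched, under heavy simplification, as an allow-list check with an audit trail. The `ResourceGateway` class and the agent and resource names are hypothetical, and real authentication and cryptographic checks are deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Tuple

Permission = Tuple[str, str]  # (action, resource)


@dataclass
class ResourceGateway:
    """Hypothetical gateway: the agent never touches resources directly."""
    permissions: Dict[str, FrozenSet[Permission]]
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def request(self, agent_id: str, action: str, resource: str) -> bool:
        allowed = (action, resource) in self.permissions.get(agent_id, frozenset())
        # Every attempt is recorded, whether or not it is granted.
        self.audit_log.append((agent_id, action, resource, allowed))
        return allowed


gateway = ResourceGateway(permissions={"agent-7": frozenset({("read", "calendar")})})
print(gateway.request("agent-7", "read", "calendar"))    # granted by the allow-list
print(gateway.request("agent-7", "delete", "calendar"))  # not on the allow-list
print(gateway.audit_log)
```

Because the decision is made by a plain lookup rather than by the model, it stays predictable and auditable no matter what the agent asks for.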

4. AI agents as participants in a consensus

Extending the previous point, the cooperation of multiple agents (AI and human), possibly from different organizations and representing different interests, can be facilitated by using classically-coded consensus protocols.

Recent developments show DAOs (Decentralized Autonomous Organizations) incorporating AI agents as representatives of different stakeholders, where agents participate in governance through smart contracts and automated consensus mechanisms. These AI-driven systems can negotiate, vote, and execute decisions while representing diverse interests, with blockchain ensuring transparency and accountability. Some platforms like ChaosChain are already implementing AI-driven agent governance where multiple AI models represent competing interests in decision-making processes.
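To illustrate the classically coded part of such a consensus, here is a toy vote tally in Python. It is not a smart contract and not how any particular DAO or platform works; the `tally` function, the quorum and threshold values, and the participant names are assumptions chosen for illustration.

```python
from collections import Counter
from typing import Dict, Optional


def tally(votes: Dict[str, str], eligible: int, quorum: float = 0.5,
          threshold: float = 2 / 3) -> Optional[str]:
    """Return the winning option, or None if quorum or threshold is not met.

    votes maps a participant id (human or AI agent) to the option it chose.
    """
    if not votes or eligible <= 0 or len(votes) / eligible < quorum:
        return None  # too few participants voted
    option, count = Counter(votes.values()).most_common(1)[0]
    # The leading option must reach the threshold share of the votes cast.
    return option if count / len(votes) >= threshold else None


votes = {
    "human-alice": "approve",
    "agent-supplier": "approve",
    "agent-customer": "approve",
    "agent-regulator": "reject",
}
print(tally(votes, eligible=5))                       # quorum met, 3 of 4 approve
print(tally({"human-alice": "approve"}, eligible=5))  # quorum not met
```

The rules are fixed and inspectable in advance, so no participant, human or AI, can reinterpret them after the votes are in.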

Business AI Initiatives Should Focus On Use Cases First

Choosing an AI technology stack is getting more complicated. Not a day goes by without the announcement of new AI models, tools and frameworks. Whichever ones you choose to power your business AI initiatives, keep in mind that you are likely to be using a much better AI tech stack next year and again the year after that.

Rather than getting bogged down in the specifics of today's AI models and platforms, businesses should start by focusing on understanding how AI, in general, can create value for them. This means being aware of what capabilities are on offer and how these could be applied to improve existing business processes. Where are the bottlenecks and inefficiencies? What tasks are eating up valuable employee time and attention? How can the organization's products or services be made more valuable to its customers? As the business grows and the competitive landscape shifts, what new business process capabilities will be required to get or stay ahead? Answering these questions will highlight areas where AI may be able to drive significant improvements.

Once potential use cases have been identified, it's important to prioritize them based on their alignment with key business objectives. Not every application of AI will be equally impactful. Businesses should focus their efforts on the use cases that have the greatest potential to move the needle, whether that's through cost savings, increased revenue, improved market insights or customer experience. It's also critical to validate the feasibility and potential ROI of each use case before investing significant resources.

With high-value use cases identified, attention can turn to the data required to power them. Many businesses are sitting on troves of data that they aren't fully utilizing. Dormant data represents an untapped opportunity to drive value with AI. Organizations should take inventory of their data assets across different functions and consider how this information could be leveraged.

Data collection should also be thoughtfully integrated into business processes. By capturing the right information at the right time, businesses can ensure a steady flow of data to feed their AI initiatives. Of course, all of this data must be properly organized and governed. Sound data management practices are foundational for successful AI deployment.

In addition to data, another key area where businesses should focus their efforts is prompt engineering. The prompts used to interact with AI models have a direct impact on the quality of outputs. Rather than starting from scratch with each new use case, businesses should develop a library of reusable prompts that are known to elicit good results. Prompts should be regarded as an integral part of defining business processes.
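A prompt library does not need special tooling to get started. The sketch below uses Python's standard `string.Template`; the template names, their wording and the `render_prompt` helper are illustrative assumptions, not recommended prompts.

```python
from string import Template

# Hypothetical prompt library: names and wording are illustrative only.
PROMPT_LIBRARY = {
    "extract_appointment": Template(
        "Extract the date, time and location from the message below. "
        "Reply with JSON using the keys date, time and location.\n\n"
        "Message: $message"
    ),
    "summarize_ticket": Template(
        "Summarize the following support ticket in at most $max_sentences "
        "sentences for a $audience audience.\n\nTicket: $ticket"
    ),
}


def render_prompt(name: str, **values: str) -> str:
    """Fill in a named template; a missing placeholder raises KeyError."""
    return PROMPT_LIBRARY[name].substitute(**values)


print(render_prompt("extract_appointment", message="Lunch on Friday at noon, usual place?"))
```

Because the templates are independent of any particular model, the same library can be kept under version control and reused when the underlying model is swapped out.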

By developing a robust pipeline of use cases, ensuring the right data is in place to support them, and crafting prompts that can stand the test of time, organizations can create a strong foundation for their AI initiatives. This foundation will serve them well regardless of which specific AI models and tools they end up using. As new and improved models become available, businesses will be well-positioned to take advantage of them. They'll be able to plug these models into their existing use cases and data, realizing the benefits of the latest technology without starting from scratch.

Combo Shots, AGI & The Diminishing Returns Of Intelligence

Intelligence is predictive power. Therefore, shouldn’t a radical increase in intelligence through AI technology also radically increase our ability to make predictions? Intuitively, it may seem obvious that the answer is yes, but the reality is more nuanced. I fully accept that AI will have a huge influence on our collective future, but the hype surrounding the development of Artificial General Intelligence (AGI), which is predicted by many to occur over the next few months or years*, often overstates the impact that we can expect from it. In this article, I explain why by using the game of pool in general, and combination (combo) shots in particular, as an allegory for how even super-intelligent AGI may not fare that much better than humans at making complex predictions.

Anyone who has played a little pool is familiar with the fact that combo shots are very difficult and that their difficulty increases exponentially as the number of intermediary balls, and the distance between them, increase. In case you are not familiar with combo shots in pool/billiards, please see the short description at the end of the article.

The necessary angle with which the cue ball hits the first object (colored or striped) ball, in order to make a successful shot, can be estimated by sight or calculated in detail. In the platonically ideal world of mathematics, if the cue ball is struck at the correct angle (i.e., precisely 0° error), the shot will be successful no matter how complex it may be, and regardless of how many combinations are attempted. This ideal circumstance does not carry over into the real world because it assumes that the player has perfect aim, the cue is perfectly straight, the billiard table surface perfectly even and clean, and the balls perfectly spherical - or equivalently that the correct angle takes any variations perfectly into account, including factors such as angular spin, air resistance, ball and surface friction, and possibly even quantum level variations. Alas, in the real world, even the tiniest error, even if immeasurably small, is highly likely to cause the shot to fail if there are more than a minimal number of intermediate balls.

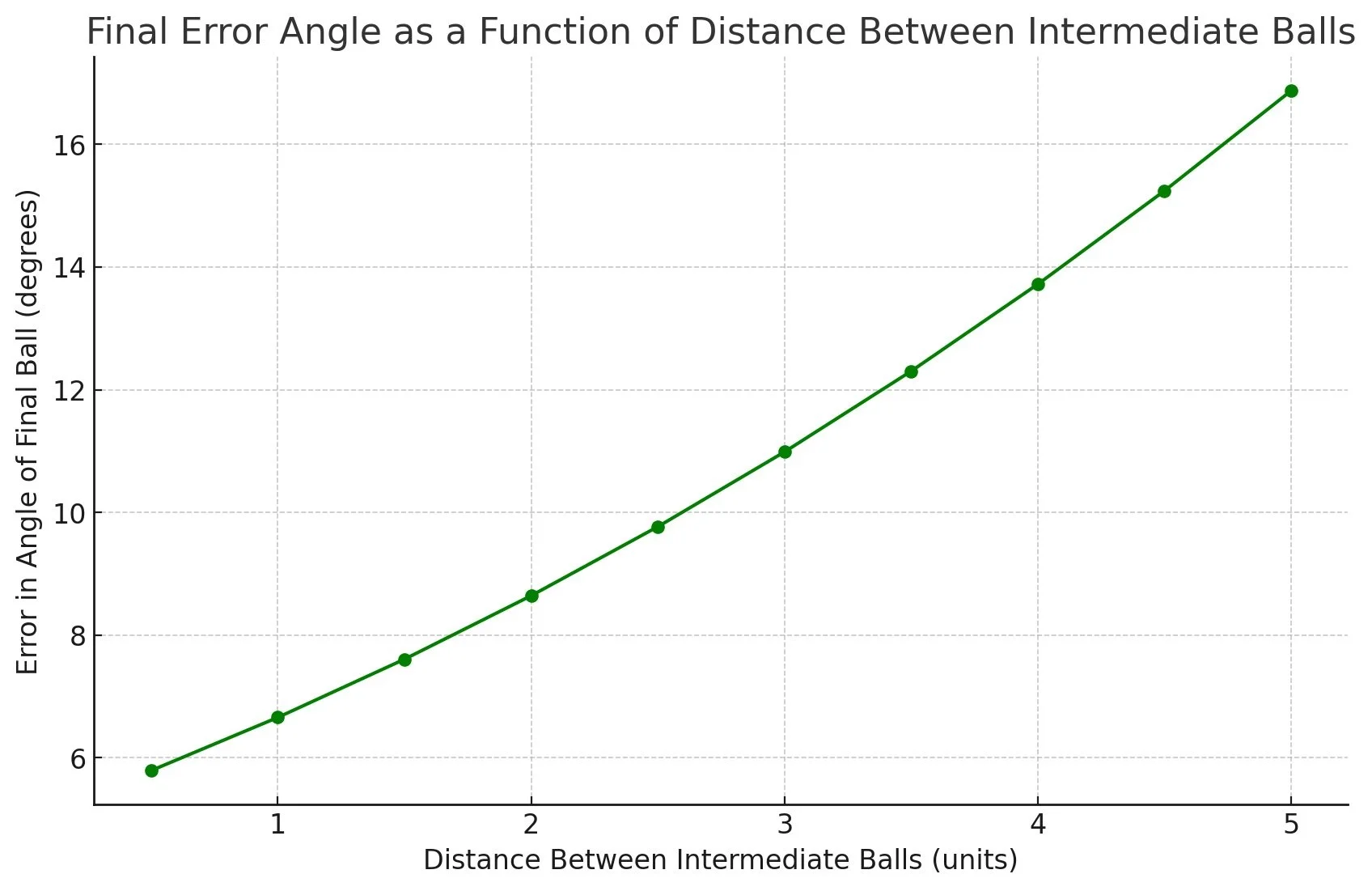

Indeed, as the number of intermediate balls in a combination shot increases, the final error in angle increases exponentially relative to the initial error.

The distance between the balls also multiplies the increase in final error.
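A rough way to see both effects is a simplified model, assumed here purely for illustration: for nearly straight-on collisions and small angles, with spin, friction and throw ignored, each collision multiplies the angular error by roughly the distance the moving ball travelled divided by the ball diameter. The function name and the 300 mm gap are hypothetical; 57.15 mm is the standard pool ball diameter (2.25 inches).

```python
from typing import Sequence

BALL_DIAMETER_MM = 57.15  # standard pool ball, 2.25 inches


def final_error_deg(initial_error_deg: float, gaps_mm: Sequence[float]) -> float:
    """Propagate a small aiming error through a chain of near straight-on collisions.

    gaps_mm[i] is the distance travelled by the i-th moving ball before it hits
    the next ball. Under the small-angle model assumed here, each collision
    multiplies the error by gap / ball diameter.
    """
    error = initial_error_deg
    for gap in gaps_mm:
        error *= gap / BALL_DIAMETER_MM
    return error


# With balls about 300 mm apart, each collision multiplies the error by
# 300 / 57.15, a little more than five.
for collisions in range(1, 5):
    gaps = [300.0] * collisions
    print(collisions, "collision(s):", round(final_error_deg(0.1, gaps), 2), "degrees")
```

In this model the error grows geometrically with the number of collisions, and doubling a gap doubles the factor contributed by that collision. The small-angle approximation stops being valid once the error reaches more than a few degrees, but by then the shot has already missed.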

Complex problems with multiple interdependent variables and a large range of potential outcomes, like combo shots, can be exceedingly difficult to solve, regardless of how much predictive power (i.e. intelligence) we have at our disposal. Undetectable variations in measurements and causal relationships can have a very material effect on outcomes. The difference between a pool hall amateur and a world champion will be very small when it comes to elaborate combo shots. The pro player might manage to combine with one or two additional intermediate object balls compared to the amateur, but cannot realistically hope to achieve much more than that. Entropy is a powerful equalizing force with respect to predictive power.

So what does this have to do with AGI?

AGI, or artificial general intelligence, is generally defined as AI having the ability to understand, learn, and apply its intelligence to a wide variety of problems, much like human beings.

As large language models exploded into the public consciousness over the past couple of years, their impressive new capabilities have fuelled speculation that humanity is on the verge of achieving AGI. This is considered to be very important because whenever AGI does emerge, it is likely to be followed by so-called super-intelligence shortly thereafter, which could represent an existential threat to humanity should it pursue goals misaligned with humanity’s interests. Some even go as far as suggesting that super-intelligent AI agents may regard humans in a similar way to how humans regard animals such as dogs or even ants - the idea being that they would be so vastly more intelligent that we could not begin to understand their behaviours and motivations, just as animals cannot hope to understand ours. This is a fallacy.

It makes sense to be cautious about AI development for many reasons, and AI/AGI will no doubt have an extreme impact, for good or bad, on the human condition. However, the doomer perspective on AI frequently falls prey to magical thinking by failing to recognize that increases in the quality of intelligence will eventually have rapidly diminishing returns. The idea that superintelligent AI will have God-like powers is predicated on the idea that it will do vastly better than humans at making and capitalizing on hard-to-make predictions. Yet what we know about certain classes of complex problems, like making combo shots in pool, is that intelligence/competency only makes a difference up to a certain point. Many of our current limitations with regard to predictive power are not related to our limited intelligence, but to the nature of chaos, that is, the ultra-high sensitivity to initial conditions. Thus, even for the most advanced super-intelligent agent, we may find that the effective advantage for making predictions in the context of real-world dynamical systems is surprisingly limited.

The real power of AI, and its impact on human life, is likely to manifest not through greatly improved predictive power from super-intelligent AGI, but rather through the massive scaling and acceleration of more moderate, that is human-comparable, levels of intelligence / predictive power. Returning to our pool analogy, AI will allow us to pocket many more balls by taking many more simple shots.

Description of combination shots in pool/billiards: In the game of pool, a shot is taken by striking the cue ball with a cue stick. The proximate objective is to have the cue ball strike and pocket a targeted object (colored) ball. The key to pocketing an object ball (i.e. getting it to fall into a hole) is to have the cue ball strike it at the correct angle, which requires predictive power (understanding of angles, spin, etc.) and dexterity. In some situations, due to the placement of the balls, it may be a good strategy to make a combination (a.k.a. combo) shot. A combo shot consists of having the cue ball first hit an intermediary object ball, which then strikes a target object ball, ideally pocketing it. In theory, a combo shot may have multiple intermediate object balls before striking and pocketing the target ball.

Intelligence & The Ladder Of Causation

If intelligence is defined as the ability to acquire and apply information, then an important question becomes: application to what? If information is to be applied, it must be in support of some objective. An intelligent agent applies knowledge for the purpose of causing some desired outcome. The cognitive model that informs its actions can therefore be considered to be a causal model.

In The Book of Why, Judea Pearl introduces the concept of the ladder of causation that describes three levels of cognitive ability that are needed in order to fully understand causality. The three levels are association, intervention and counterfactuals.

The first level, association, consists of observing and detecting patterns of correlation between the elements in a system. At this level, one can recognize that the sunrise coincides with the crowing of the rooster, but it cannot be determined which of these phenomena caused the other, or indeed whether a third event caused them both. Most of modern statistics, AI and big data rely largely on correlation and are therefore not able to directly identify causal relationships.

The second level, intervention, permits establishing causality between particular variables in a system by performing empirical experiments. If B happens every time I do A, then I have established that A causes B.

The third level, counterfactuals, permits the inference of causality based only on a causal model. Counterfactuals are like thought experiments in which a causal model is used to determine what would happen, if particular interventions to a system were to be performed, or what would have happened had some variables been different. This level allows us to successfully ‘predict’ counterfactual realities, provided that a sufficiently representative causal model has been established.
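The gap between the first two levels can be shown with a small simulation. The Python sketch below is a toy model of my own construction, not something taken from The Book of Why: a hidden cause Z (say, dawn) drives both X (the rooster crowing) and Y (the sky brightening), while X has no effect on Y at all. Observation alone shows a strong correlation; setting X by hand, as an experimenter would, makes it vanish.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n=5000, intervene=False, seed=0):
    """Return the correlation between X and Y in a toy world with a hidden cause Z.

    With intervene=True, X is set by the experimenter, independently of Z.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)
        x = rng.gauss(0, 1) if intervene else z + rng.gauss(0, 0.5)
        y = z + rng.gauss(0, 0.5)  # Y never depends on X
        xs.append(x)
        ys.append(y)
    return pearson(xs, ys)


print("observed correlation:  ", round(simulate(intervene=False), 2))
print("after intervening on X:", round(simulate(intervene=True), 2))
```

The third level goes one step further: given a trusted causal model such as the one hard-coded in `simulate`, the outcome of an intervention can be worked out without running the experiment at all.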

There is a direct relationship between Pearl’s ladder of causation and the concept of gene-level and organism-level cognition which I discussed in my previous article titled “All Life Is Intelligent”.

What I coined gene-level cognition is a form of intelligence, defined as “the ability to acquire and apply information”, that is produced entirely by evolutionary processes which are a priori to the organisms that possess it. In other words, while an organism with gene-level cognition can acquire and apply information, it does so based on established causal relationships which have, in effect, been encoded in its genes. The establishment of the causal relationships that determine its behaviour takes place in advance, during the evolutionary process that leads to its particular configuration. Gene-level cognition drives purely instinctual or reflexive behaviours. In relation to Judea Pearl’s ladder of causation, gene-level cognition falls squarely into the second level: intervention. That is, evolution establishes causation by performing empirical experiments in the form of random mutations and selective pressures within an organism’s ancestral environment.

Organism-level cognitive models are formed, as the name suggests, within the organism. When an organism learns through imitation or experience, it is creating an organism-level causal model. Brains evolved to provide organisms with organism-level cognitive modelling abilities. Once an organism has developed a causal cognitive model, it can deal with novel circumstances to the extent that its learned model is applicable. In terms of Pearl’s ladder of causation, learned cognitive models permit the use of counterfactual thought experiments to drive behaviour.

Intelligence Is All About Predictions

Intelligence is the ability to acquire and apply information. To acquire information means to incorporate it, or contextualise it, into a cognitive model. To apply information means to use a cognitive model to make predictions.

The role of cognition, indeed the reason for which it evolved in humans and to varying extents in other animals, is to guide behavior in such a way as to maximize the prospect of survival and reproduction. At its essence, cognition simply involves the processing of sensory data from the environment to make predictions and to implement effective behaviors.

Some might claim that this view of intelligence is too simplistic. A popular theory is that of multiple intelligences, put forward by Howard Gardner in his book Frames of Mind (1983), which holds that what we call human intelligence is not a single phenomenon, but rather a number of independent cognitive modalities: logical-mathematical, verbal-linguistic, visual-spatial, musical-rhythmic, bodily-kinesthetic, interpersonal, intrapersonal and naturalistic. However, while I agree that there are indeed many types of intelligence, all of them ultimately involve the processing of information in the service of making predictions to guide behaviours of one sort or another. For example, an expert orator can effortlessly predict the subtle meaning his improvised words will convey. A trained guitarist can predict the sounds that will be made by his instrument based on subtle variations in the movements of his fingers. An expert acrobat can predict with uncanny accuracy how the movements of her body will carry her through space.

What makes it possible for intelligence to exist, in whatever form, is the presence of a predictive model. Virtuosity at any cognitive task is reached by the development, through practice or repetition, of a highly effective, i.e. predictive, behavioural model. Even the ability to solve novel problems is a cognitive skill which is developed by repetitively solving other novel problems. We learn to solve novel problems, within a domain or field, by learning to recognise and incorporate the most relevant, or predictive, available information into a predictive model.

Models are key to intelligence because they make it possible for us to make sense of the world that we observe. In order to interact with the world, we need to anticipate it. We need to place our observations into context, recognize patterns and extend them into the future. We need predictive models to run alternative scenarios in order to select those behaviours that we expect will lead to the outcomes we hope to achieve.

A prediction need not always concern the future. For example, the investigation of a crime involves creating a model using known information to determine unknown information. It is just like making a prediction even though it concerns events which temporally are in the past. The true essence of intelligence is to decrease our uncertainty about the unknown by applying models developed from the known. The future is the most common source of uncertainty, but an obfuscated past is an equally valid target for making predictions.

All Life Is Intelligent

The expression intelligent life is usually applied to classify life-forms, such as humans, which are self-aware, use language, and like to philosophise about free-will. We use it most often in the context of our musings about whether intelligent (i.e. human-like) beings exist elsewhere in the Universe and whether we will one day be able to engage with them. Our anthropocentric perspective on intelligence has had the unfortunate effect of muddling our understanding of what it is and why it exists.

Intelligence, if we define it simply as the ability to acquire and apply information, is a fundamental characteristic of all living things. Living organisms, which are at their essence made of inanimate matter, are so named because they assimilate and organize matter and energy in the service of pattern self-replication. In order to engage and adapt to the environments in which they exist, they must necessarily collect and process, that is acquire and apply, information.

Certainly there are profound differences with regard to the intelligence of different species, but no living organism is entirely devoid of the ability to acquire and apply information. Every living organism interacts with its environment, absorbing and transforming matter, heat and light into energy and biomass according to its inherited genetic blueprint. Even the simplest unicellular life-forms perform amazingly intricate behaviours upon which their survival and reproduction are dependent. These behaviours are anything but random. They are guided by a kind of primordial intelligence that allows the organism to act on relevant information, sensed in its environment and within its own body. When a living organism senses the presence of a resource that it needs or a threat that it is facing, and reacts accordingly by moving towards the resource or away from the threat, it is exhibiting a very basic form of intelligence. That is, it is acquiring and applying information, if only the knowledge of the presence of said resource or threat.

At its essence, intelligence is about making predictions in the service of selecting and implementing behaviours that lead to preferred outcomes. The primary preferred outcomes driven by natural selection are survival and reproduction, but any preferred outcome suffices to motivate intelligence. However, without a preferred outcome, the concept of intelligence is meaningless. If all outcomes are of equal value to an agent, then its behaviour does not need guidance. This is why intelligence is a quality that we only attribute to living things: only living things can prefer, teleologically speaking, one outcome over another. By prefer, I do not mean to imply that all living things are capable of having subjective conscious experiences, but simply that they consistently behave in such a way as to favour certain outcomes, which are generally those that favour their survival and reproduction.

Is an AI a life-form?

Humans have always extended their ability to acquire and apply information by developing tools, from stone implements to ever more advanced AI systems. The forms that our tools take are shaped by our desires, i.e. our preferred outcomes. Necessity really is the mother of invention. Tools, including today’s most advanced AIs, want nothing beyond that which their creators want. They are very much a part of us. The fact that they are made up, not of human cells, but of inanimate matter, makes no difference. The atomic building blocks at the root of our biology are just as inanimate as a stone implement or a silicon chip. Life is not about matter. It is a deeply integrated pattern of replication and change, which was set in motion more than 4 billion years ago and continues to expand outwards like a concentric wave on the surface of a pond. Artificial computing devices, from calculators performing simple algorithms to the most advanced AIs, are alive in the sense that they extend us. To the extent that we endow them with their own preferences about future outcomes, they will be life-forms in their own right.

If all life-forms are intelligent, then why do only certain organisms have brains?

A plant has no brain, and therefore no internal cognitive map of its environment which it can navigate to understand causal relationships and improvise strategies based on changing conditions. And yet, it perceives light and reacts by bending towards it. Plants, microbes and other brainless organisms inherit a kind of virtual cognitive model that is encoded in automatic, i.e. instinctual, reactions, developed through natural selection. In a sense, a light-seeking plant’s virtual cognitive model “tells” it to bend towards light, but the proximate cause of its behaviour is simply that it is equipped with light receptors that trigger certain inherited behavioural routines. By virtual, I mean that the instinctual cognitive-model does not have a full abstract representation physically present inside the plant, unlike the cognitive-models inside a human brain, which are represented through patterns of neural connectivity. The virtual model exists only through the mark left on the plant’s inherited anatomy by hidden relationships between its ancestors and its ancestral environment, a mark painted by the coarse brush of life and death. The importance of photosynthesis for the plant’s survival exerted selective pressure on its ancestors. Those which, through mutation, accidentally stumbled upon the beneficial behaviour of bending towards light survived more often than those that did not. Over many generations, the benders took over. So, while an individual plant cannot learn in any important sense*, plant genes are able to learn behaviours such as bending towards light.

Since we humans are organisms, we tend to take an organism-centric view of life. We regard genes as the means by which organisms reproduce. We could just as easily consider life to be centred on genes, with organisms being the means employed by genes to reproduce. From this perspective, the virtual cognitive model discussed above underpins a kind of gene-level cognition.

More complex organisms, including humans, also have plenty of behaviours that are driven by gene-level cognition. We have thousands of bodily reflexes such as those that regulate our breathing, blood circulation, metabolism, cell division, temperature, acidity and much more, which occur subconsciously and are not subject to abstract causal analysis, prediction or decision making at the organism level. However, the presence of a nervous system and brain allows humans, as well as many other complex organisms, to adapt some of their cognitive information models, to varying degrees, without having to wait for selective pressure to do its work. These cognitive models don’t just react to information acquired during the lifespan of the organism, but are also directly shaped by it. The advantage of brains, and the extemporaneous organism-level adaptive cognitive models that they bring with them, is that learning can occur at the level of the organism, which means that the resulting behavioural adaptations are many orders of magnitude faster than those from gene-level cognition, i.e. natural selection. Of course, it should also not be forgotten that this higher form of cognition also came about through natural selection and is thus itself a product of gene-level cognition.

The behaviours generated from extemporaneous organism-level cognitive models are based on predicted causal relationships in an individual organism’s environment. These intelligent behavioural changes are more likely to bear fruit than ones based on blindly applied random mutations. They also make it possible for organisms to engage in more complex behaviours. There are limits to the complexity that can be managed through a purely instinctual, gene-level, cognitive model. For example, many cognitive functions in humans need to be calibrated through information feedback loops before they can be used effectively. Examples include vision, motor function and language, each of which requires months or even years of trial and error, i.e. feedback-driven learning, to fully develop. By definition, the learning and calibration of cognitive function through environmental feedback at the temporal scale of an organism is only possible when cognitive-models can adapt at the level of that organism. Such learning is often accompanied by complementary non-cognitive physiological development. Learning to walk or speak, for example, are complex, feedback-driven processes in which cognitive and physical development occur in tandem, influencing one another along the way.

Thus, the primary difference between gene-level and organism-level cognition is the granularity and speed with which feedback, i.e. information, can be processed. Gene-level learning (a.k.a. natural selection) simply creates random variations in organisms through mutation, and then allows the environment to select, through a life and death feedback process, which variations to retain. This takes time, and any information acquired by an individual organism cannot be processed unless it has a direct impact on its survival and reproduction. Extemporaneous organism-level learning, on the other hand, is fast, and an organism that has it is able to make constructive use of detailed feedback information by physiologically creating, maintaining and navigating an abstract map of its environment, complete with causal relationships that allow it to adapt its behaviour during the course of its life.

*There is some evidence that plants are capable of some basic experience-based learning.